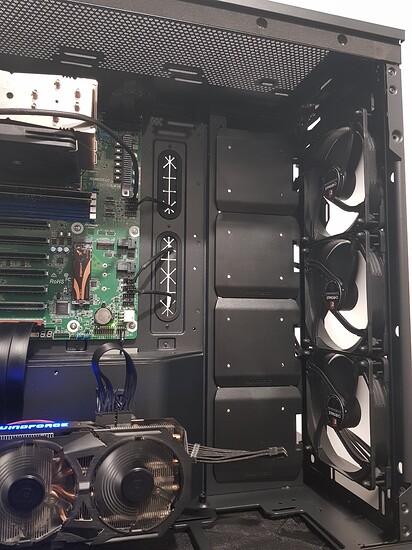

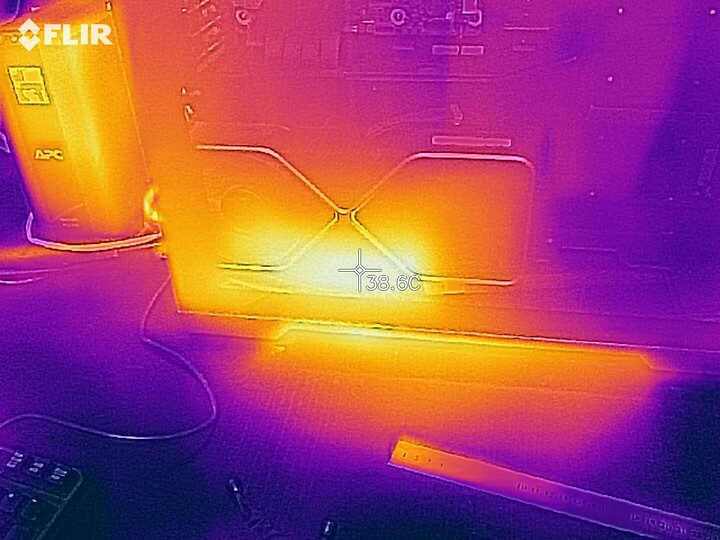

More thermal camera fun… I finally swapped the GPUs between my current workstation and this tentative EPYC workstation. Now, I think it’s common knowledge that the RTX 3090 FE has a good cooler, and honestly I don’t have any complaints, but it does run hot and it is a bit scary. You will definitely burn your fingers if you touch it in the wrong place while it’s running.

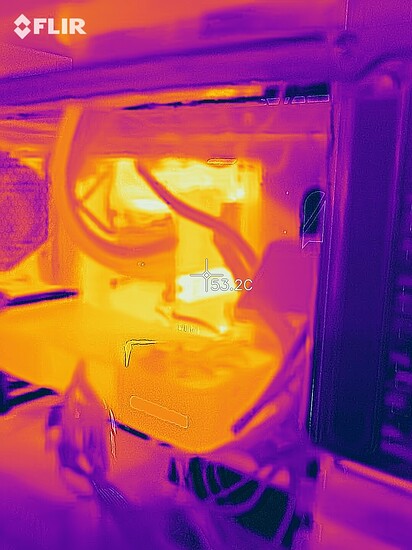

Here’s what it looks like a couple of minutes after I pulled it out of my X99, where it was only showing the desktop with no GPU-accelerated software running:

(room temperature is 18 °C)

Keep in mind, its fans do not start until it runs something harder than Chrome and Office.

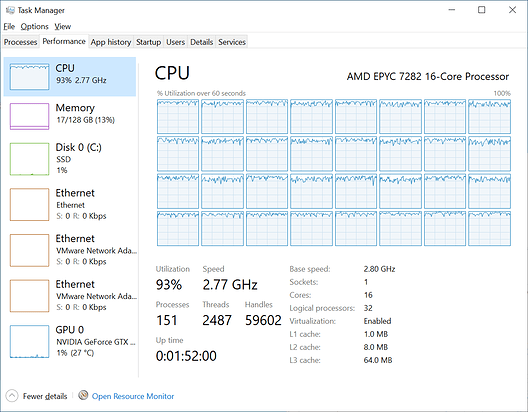

And now it’s in. You really need to install all three bracket screws; this board is way too heavy otherwise. It tilts into the PCIe socket something scary. What you’re seeing on the left is the UPS showing idle power draw from the wall, and yes, that’s only 63 W for the whole system. It varies between 60 and 75 W with no clear average, so call it 67.5 W (the midpoint).

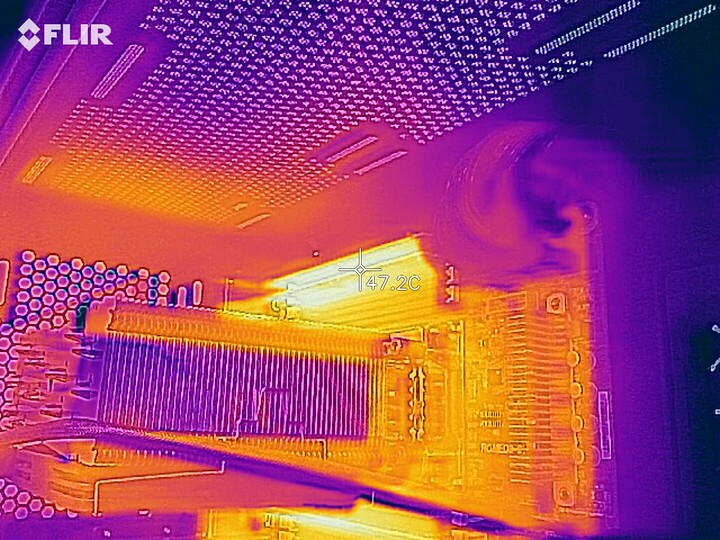

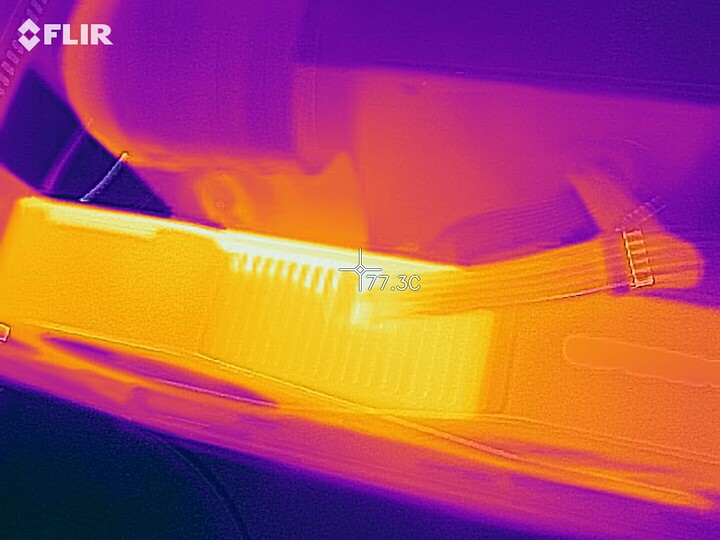

Now for the real crazy stuff. I launched Cyberpunk 2077 and set all graphics options to the maximum (aptly named “Psycho” in some cases). RTX ON, of course. Looks perfectly lovely. Now the UPS reads 455 W and here’s what it looks like on thermal:

If anyone still wondered why I chose to install that GPU well away from the motherboard…

What’s interesting is that NVIDIA clearly prioritized silence: this GPU is operating at or near max TDP (from previous tests in this thread we know this machine rarely uses more than 100 W, so the additional 350 W or so are all for the GPU). Yet the fans barely make any noise and the airflow is a very gentle breeze.
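For anyone who wants to redo the arithmetic with their own UPS readings, here is a minimal sketch of the estimate above. It only uses the figures quoted in this post; the function names are made up for illustration, and it assumes the rest of the system sits at its 100 W ceiling and ignores PSU losses, so treat the result as a rough estimate of the GPU's share of wall power rather than a measurement of the card itself.

```python
# Rough power-budget estimate from UPS wall readings (all values in watts).
# Figures are the ones quoted in the post; everything else is an assumption.

IDLE_MIN_W = 60.0          # lowest idle reading seen on the UPS
IDLE_MAX_W = 75.0          # highest idle reading seen on the UPS
LOAD_W = 455.0             # UPS reading while running Cyberpunk 2077
REST_OF_SYSTEM_W = 100.0   # assumed ceiling for everything except the GPU


def midpoint(low_w: float, high_w: float) -> float:
    """Middle of a range, used as a stand-in when there is no clear average."""
    return (low_w + high_w) / 2


def gpu_share(load_w: float, rest_w: float) -> float:
    """Wall power left over once the rest of the system is subtracted."""
    return load_w - rest_w


idle_estimate = midpoint(IDLE_MIN_W, IDLE_MAX_W)
gpu_estimate = gpu_share(LOAD_W, REST_OF_SYSTEM_W)

print(f"Idle estimate: {idle_estimate} W")
print(f"GPU share under load: about {gpu_estimate} W")
```

Since the 100 W figure is an upper bound for the rest of the system, the real GPU share of wall power could be somewhat higher, while PSU losses push the card's actual draw the other way.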

This leads to some… uncomfortably high temperatures:

60 °C at the front, 80 °C at the back. This board should come with several “warning: hot surface” labels.

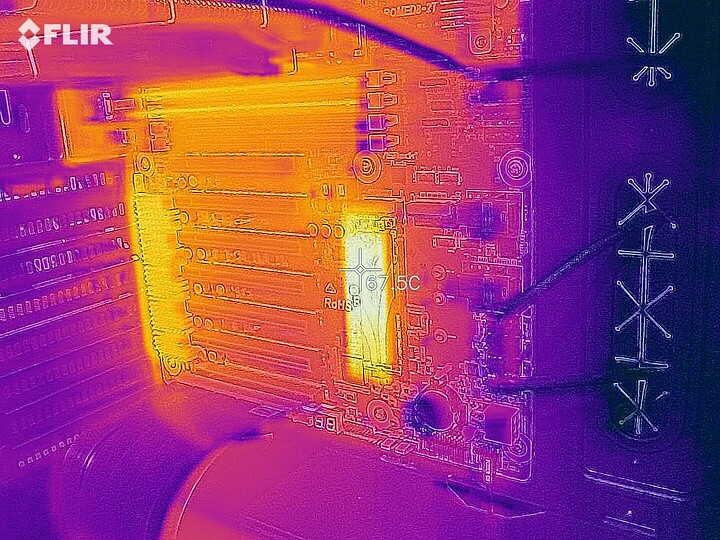

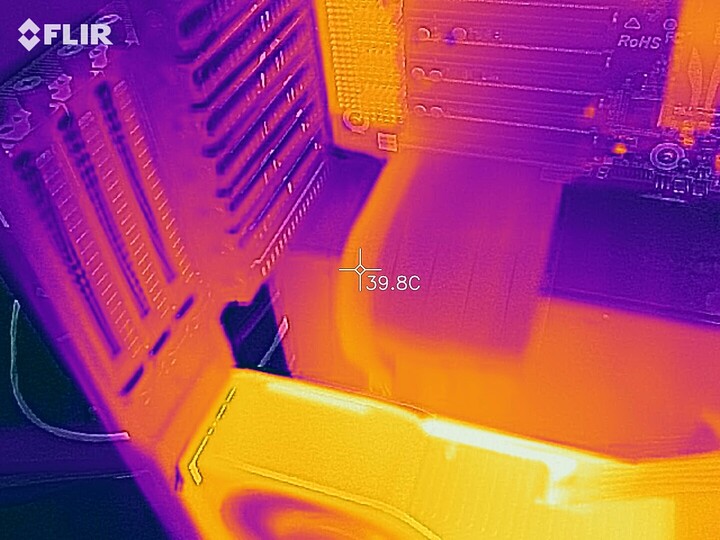

And you can tell it’s really sucking in the watts just by looking at the PCIe extension:

The first ribbon is regular wiring and carries the up to 75 W of power a PCIe slot can provide. All the other ribbons are data. That power ribbon does feel warm to the touch. Remember that LTT video where they strung three meters of PCIe extensions together and it worked? Well, it probably wouldn’t have with a 3090.

Other than that, I’m pleased to confirm that the 3090 runs without issue on EPYC with Windows 10.

Oh yeah, I’d like to add: while this GPU occupies 3 slots, its actual thickness is 2.5 slots. That, combined with the low airflow required by its fans, means it should be safe to run several of these right next to each other. I’d make sure to have some good case airflow, though.