I don’t know why you’d consider Rome at all now unless you could get a really cheap used one (I consider the £170 I paid for an 8-core 7262 with 128MB of L3 a bargain) or you need a SKU with fewer cores than the Milan range starts at. Or, I suppose, you need a CPU in a real hurry…

@Nefastor, to my understanding, the limit is not in the standards or the protocol stack, but between the CPU and the Ethernet adapter. When 10GbE emerged in the early 2000s, no CPU was fast enough to keep up with the interrupt rate. So the hardware makers got clever (I think Sun was first). A 10GbE adapter presents itself to the OS as several hardware queues, each with its own interrupt, typically one per CPU; in effect, one virtual (in hardware) Ethernet adapter per CPU. This spreads the interrupts across the CPUs and reduces the per-CPU rate to a manageable level.

I can see this with the Intel NICs that I have at present (X550, X710, X720(?)).

You can override this behavior on the Intel NICs (at least) if you do not want all your CPUs servicing Ethernet interrupts.

The catch here is that, for a single socket/connection, all the interrupts for that connection seem to stay on one CPU, so a single transfer is bottlenecked by the interrupt rate one CPU can handle.

(Again, for the datacenter folk, this is a non-issue, and could count as desirable.)
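The per-CPU queue mechanism described above is usually called Receive Side Scaling (RSS). The NIC computes a Toeplitz hash over each packet’s addresses and ports, and the low bits of that hash select an entry in an indirection table that maps to a receive queue (each queue with its own interrupt, usually pinned to its own CPU). A minimal Python sketch, assuming Microsoft’s published sample 40-byte key and a hypothetical 128-entry round-robin indirection table; real NICs (Intel included) let both be reconfigured, and table sizes vary by model. It shows why one connection never spreads: every packet in it carries the same 4-tuple, so it always hashes to the same queue.

```python
import ipaddress
import struct

# Microsoft's published sample 40-byte RSS key (assumption: real NICs may use
# a different, configurable key).
SAMPLE_RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0"
    "d0ca2bcbae7b30b477cb2da38030f20c"
    "6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash as used by RSS: for every set input bit,
    XOR in the 32-bit window of the key that starts at that bit position."""
    key_bits = len(key) * 8
    if key_bits < len(data) * 8 + 32:
        raise ValueError("key too short for this input")
    key_int = int.from_bytes(key, "big")
    result = 0
    for i in range(len(data) * 8):
        if data[i // 8] & (0x80 >> (i % 8)):  # input bits, MSB first
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def ipv4_tcp_tuple(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """RSS input for TCP/IPv4: src addr, dst addr, src port, dst port (network order)."""
    return (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))


def rss_queue(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              num_queues: int, table_size: int = 128) -> int:
    """Pick a receive queue: the low bits of the hash index an indirection table.
    The round-robin table is a hypothetical default; table_size must be a power of two."""
    table = [i % num_queues for i in range(table_size)]
    h = toeplitz_hash(SAMPLE_RSS_KEY, ipv4_tcp_tuple(src_ip, dst_ip, src_port, dst_port))
    return table[h & (table_size - 1)]


if __name__ == "__main__":
    # Every packet of one connection has the same 4-tuple -> same queue, same CPU.
    print(rss_queue("192.168.1.10", "192.168.1.20", 50000, 445, num_queues=8))
    # Different source ports (i.e. different connections) spread across queues.
    print(sorted({rss_queue("192.168.1.10", "192.168.1.20", p, 445, 8)
                  for p in range(50000, 50064)}))
```

Changing the key or the indirection table (which is what the Intel drivers let you override) moves flows between CPUs, but it can never split one flow across several.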

I went through a great deal of trouble on a prior project to grease a single-connection transfer (used Linux native AIO, large buffers, and aligned everything). Got up to ~500MB/s transfer rates, as I recall.
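For scale, a rough best-case figure for TCP payload on 10GbE: the raw 10Gbps is 1.25GB/s, but each full-size frame also carries preamble, Ethernet header, FCS, interframe gap, and IP/TCP headers. This sketch assumes IPv4, a standard 1500-byte MTU, and TCP without options, and it ignores ACKs, retransmissions, and any other traffic on the link.

```python
# Standard Ethernet per-frame overhead on the wire, in bytes.
PREAMBLE_AND_SFD = 8
ETHERNET_HEADER = 14
FRAME_CHECK_SEQUENCE = 4
INTERFRAME_GAP = 12
IPV4_HEADER = 20
TCP_HEADER = 20  # without options; TCP timestamps would add 12 more


def tcp_goodput(line_rate_bps: float, mtu: int = 1500, tcp_options: int = 0) -> float:
    """Best-case TCP payload rate in bytes/s when every frame is full-size."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - tcp_options
    on_wire = mtu + ETHERNET_HEADER + FRAME_CHECK_SEQUENCE + PREAMBLE_AND_SFD + INTERFRAME_GAP
    return line_rate_bps / 8 * payload / on_wire


if __name__ == "__main__":
    ten_gbe = tcp_goodput(10e9)
    print(f"10GbE: {ten_gbe / 1e6:.0f} MB/s")            # 10GbE: 1187 MB/s
    print(f"1GbE:  {tcp_goodput(1e9) / 1e6:.0f} MB/s")   # 1GbE:  119 MB/s
    print(f"500 MB/s is {500e6 / ten_gbe:.0%} of that")  # 500 MB/s is 42% of that
```

By this estimate, ~500MB/s on one connection is a bit over 40% of what the link can carry.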

Later (for another project) it occurred to me to look for other folk who had solved the problem. Found there was a great deal of work that went into SMB3 (and thus into Windows, Linux, and Samba). Looks like this landed in the early 2010s, so your bump in Windows file transfer rate could easily have been due to a Windows update (and SMB3).

When testing with older NICs (on either end), for a single file transfer I could see several Linux kernel worker threads very active. Under the hood, that single transfer was spread across several connections, and used the full 10Gbps rate.
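A minimal sketch of the idea of spreading one transfer over several connections, in the spirit of SMB3 Multichannel but not the SMB3 protocol itself: the data is cut into stripes, each stripe travels on its own TCP connection (with its own source port, so the NIC’s per-flow hash can place it on a different queue and CPU), and the receiver reassembles the stripes by index. The function names and the 8-byte index/length header are hypothetical, and it runs over 127.0.0.1 purely for illustration.

```python
import socket
import struct
import threading


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the peer closes first."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed before sending all data")
        buf += chunk
    return bytes(buf)


def striped_transfer(data: bytes, num_connections: int = 4) -> bytes:
    """Send `data` as stripes over several TCP connections to 127.0.0.1
    and reassemble it on the receiving side by stripe index."""
    stripe = max(1, -(-len(data) // num_connections))  # ceiling division
    pieces = [data[i * stripe:(i + 1) * stripe] for i in range(num_connections)]
    received = [b""] * num_connections

    with socket.create_server(("127.0.0.1", 0), backlog=num_connections) as listener:
        listener.settimeout(10.0)  # fail instead of hanging if a sender dies
        port = listener.getsockname()[1]

        def send(index: int) -> None:
            # Each connection gets its own ephemeral source port, hence its own 4-tuple.
            with socket.create_connection(("127.0.0.1", port)) as sock:
                header = struct.pack("!II", index, len(pieces[index]))  # hypothetical framing
                sock.sendall(header + pieces[index])

        def receive(conn: socket.socket) -> None:
            with conn:
                index, length = struct.unpack("!II", recv_exact(conn, 8))
                received[index] = recv_exact(conn, length)

        senders = [threading.Thread(target=send, args=(i,)) for i in range(num_connections)]
        for t in senders:
            t.start()
        receivers = []
        for _ in range(num_connections):
            conn, _addr = listener.accept()
            t = threading.Thread(target=receive, args=(conn,))
            t.start()
            receivers.append(t)
        for t in senders + receivers:
            t.join()

    return b"".join(received)


if __name__ == "__main__":
    payload = bytes(range(256)) * 4096  # 1 MiB of test data
    print(striped_transfer(payload, num_connections=4) == payload)  # True
```

The Python threads only show the structure; they won’t reproduce the speedup, which comes from the kernel and NIC handling each connection on a different CPU.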

There are also improvements at the Ethernet adapter level, if you have newer hardware on both ends. With newer NICs on both ends and current software (CentOS 8 / Samba), the load drops a lot. (Looked only briefly, as my application met and exceeded requirements.)

Also, while I do not live in France, I too have had gigabit internet for several years.

Note that none of the above applies to mere 1GbE.

@KeithMyers note that the Thermaltake P3 is an open-sided case (suitable for a test rig for hardware folk), and lacks the usual flow-through airflow of even a desktop case. Thus my concern. Thinking of mounting a single fan just to generally push airflow over the motherboard.

There are some finned power(?) converters at the back center of the motherboard that in still air are getting just over 70C. That drops quite a lot with a little airflow.

@Preston_Bannister Yes, that would be a concern I suppose.

I always cram 10lbs of stuff into even large cases and that is a squeeze, so I engineer for lots of air movement and volume exchange. Noise concerns be damned.

Whether the existing airflow is just good to begin with or I just don’t max out the 1GbE LAN chip on my EPYCD8 mobo, I see 40°C for both the motherboard temp and the card-side temp. I don’t have a LAN interface temp in the IPMI interface, so I’m guessing the card-side temp is the closest. And that temp is with 4 GPUs sitting over the LAN chip.

Even with the Aquantia 10GbE chip on the TR board, I stay in the 40s with the same 4 GPUs covering up the heatsink.

Hello,

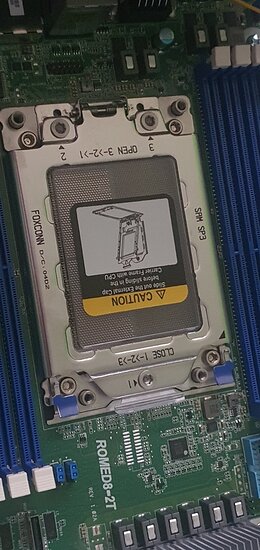

I would like to build a server for a home lab. The CPU will be a 7313P. I plan to use ESXi 7. Which is the better choice: the Supermicro H12SSL-NT-B or the ASRock ROMED8-2T?

It is my understanding that these boards are not officially supported by VMware.

If they’re not supported, will the onboard 10G networking work?

I’m a network novice, but why do you need special drivers for 10GbE?

I didn’t have to get any special 10GbE drivers for either of my 10GbE-capable motherboards. The OS standard drivers work fine.

Aquantia AQC107 chipset and Intel x550 chipset.

You don’t, really. It’s just that gigabit Ethernet has been around for so long that even your toaster has drivers for it.

Many 10G and faster cards already have drivers in both Linux and Windows. Mellanox and Chelsio cards should work right away, for example.

However there are lesser known cards that may need drivers installed.

Also, if you wish to take advantage of the special features, tuning, or management of these cards, then you may need the specific drivers for that card.

You don’t need ‘special’ drivers for 10GbE, but you do need drivers for that Ethernet chipset. Since akv8 said they were planning to run ESXi, they would need support in the hypervisor to bridge those host ports over to the VMs; that’s what my question about ‘motherboard support’ was about.

I imagine you could just use PCIe pass-through to send the 10G NICs straight to the VMs, but then you’d need another network to run your VSAN/management on.

I run Proxmox VE myself, which is based on Debian, so it’s fairly easy for me to find missing drivers and patch the host. But my limited experience with ESXi when EPYC Naples/HS-11 motherboards were new was far from pleasant: it wouldn’t even boot, and patching ESXi was non-trivial for a new user like me.

Just seen a 7443P advertised as in stock in the UK at Scan but I’ve seen it cheaper elsewhere.

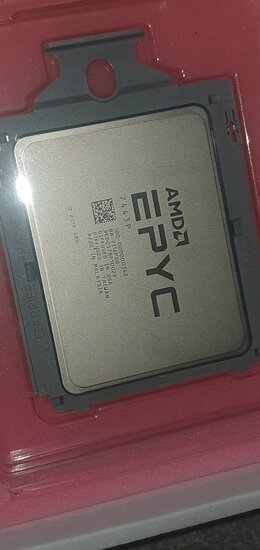

I got my ROMED8-2T from Amazon as used-like new for a good price; the box is just a bit damaged, so they sell it as used. I might still return it and get a new one from the distributor when ASRock’s next batch arrives, but this one looks new. I am waiting for the Noctua cooler and RAM, and then I can try it. How do I post pictures in here? I can’t figure it out.

The reply window has a help prompt that pops right up at first:

drag or paste images right here.

Or use an external image hosting site and post the link using the editor’s tools.

Nice. Lots of room in there. What is your cooling going to be?

Good choice for your 4U chassis. Should work well. The STH review was with a TR 1950X, probably running right around 4GHz, which is where your 7443P will be running, and it kept temps at 68°C under an AIDA64 stress test. The only issue is its bottom-to-top fan orientation, which doesn’t match a server’s normal front-to-back airflow. Your P/S location will help with that little issue.

Yes, you are correct about that, but I could not find a good solution with a fan this big; the others, I fear, might be too loud for a workstation. I will see if I can somehow remount the fans to blow front to back, but I think it would be worse because it was not designed that way.

Has anyone been able to get any of the 7003s shipped to the US? They seem to be plentiful in Europe but are nowhere in the US.

Hey Nefastor,

Do you know if it’s possible to get just this mounting part? I am thinking of adding another 4U case and running riser cables through an opening I would cut in the top of this case into the other one, and this mounting part would be great for that.