Hey guys. I hope you’re all doing well. I’ve been off the forum for a few days. Here’s what happened: I ordered a Core i7-6850K on eBay to replace the 5820K in my aging workstation. I say “aging” but it’s still plenty capable… it had one issue though: you may remember that while socket 2011-3 processors normally have 40 PCIe lanes, the very first and cheapest one, the 5820K, only has 28.

Back when I bought it, that wasn’t an issue: NVMe was still unobtanium and the only PCIe card in the system was a GPU. Years later I added an NVMe drive and everything was still OK.

And then I added a 10 GbE NIC, and promptly ran out of PCIe lanes. Basically, I could not get more than 350 MB/s over Ethernet when it should have been around 1 GB/s. That’s really the main reason I eventually embarked on building an EPYC workstation. But I knew I could buy a second-hand Core i7 and bring my old machine up to my new standards. So I did. It cost me only 134 € and I even got a nice 300 MHz clock bump in the process.
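For anyone who wants to put numbers on that shortfall, here is a minimal sketch of the unit conversion. It only uses the 10 Gbit/s nominal line rate and the 350 MB/s figure from above; the variable and function names are made up for illustration, and protocol overhead is ignored, so the raw ceiling comes out a bit higher than the roughly 1 GB/s you would see in practice.

```python
# Rough sanity check of the Ethernet numbers quoted above.
# Assumption: decimal units (1 Gbit = 1000 Mbit, 1 byte = 8 bits), no protocol overhead.

LINE_RATE_GBIT_S = 10    # nominal 10 GbE line rate
OBSERVED_MB_S = 350      # throughput measured on the lane-starved 5820K


def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Convert a rate in Gbit/s to MB/s."""
    return gbit_per_s * 1000 / 8


raw_ceiling_mb_s = gbit_to_mb_per_s(LINE_RATE_GBIT_S)
fraction_of_line_rate = OBSERVED_MB_S / raw_ceiling_mb_s

print(f"Raw ceiling: {raw_ceiling_mb_s:.0f} MB/s")
print(f"Observed:    {OBSERVED_MB_S} MB/s ({fraction_of_line_rate:.0%} of line rate)")
```

So the NIC was running at well under a third of what the link can carry.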

With that out of the way, I’ve decided to use my EPYC for what it’s meant to do. I present to you my latest baby:

That’s a 25U rack with, top to bottom:

- A 1.55 kVA Eaton UPS (just 1U, a bit pricey but it’s really nice)

- Empty space for a 4U chassis (future backup server)

- The EPYC 7282 / ROMED8-2T in a 24-drive 4U chassis

- Four NetApp DS4246 disk shelves (thank you eBay)

It’s very sparsely populated for now, but the whole idea was to build “the only file server I would ever need”. I don’t like wasting time every few years building entirely new infrastructure. Now I won’t have to. This thing has 108 TB of storage in the “main” array (8 EXOS X18) and two of the disk shelves are filled with almost every 3.5" HDD I’ve bought over the last 10 years, as one giant backup pool. So that’s 56 drives installed, with room for 120 in total.
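The drive count is easy to check with a few lines of Python. This is just a sketch of the arithmetic: it assumes 24 bays per enclosure (the server chassis is a 24-drive unit and each DS4246 holds 24 drives) and that the two backup shelves are completely full, which is what the 56-drive total implies. All the names are illustrative.

```python
# Bay and drive tally for the rack described above.
# Assumptions: 24 bays per 4U enclosure, and both backup shelves fully populated.

BAYS_PER_ENCLOSURE = 24

server_chassis = 1      # the EPYC 7282 / ROMED8-2T box
disk_shelves = 4        # NetApp DS4246 units

main_array_drives = 8   # the EXOS X18 drives in the "main" array
full_backup_shelves = 2

total_bays = (server_chassis + disk_shelves) * BAYS_PER_ENCLOSURE
installed_drives = main_array_drives + full_backup_shelves * BAYS_PER_ENCLOSURE
free_bays = total_bays - installed_drives

print(f"Total bays:       {total_bays}")
print(f"Installed drives: {installed_drives}")
print(f"Free bays:        {free_bays}")
```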

I changed the jumper settings on the motherboard to use both M.2 sockets, and used them for the system pool (a pair of cheap 128 GB sticks in a mirror). The drives are controlled by two 16-port 6 Gb SAS HBAs (LSI 9201-16i, again, a nice eBay find):

- 24 ports are directly connected to the server chassis’ backplanes

- 8 ports go to bulkhead passthroughs and from there to the disk shelves
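The port split above can be checked the same way. A minimal sketch, with one assumption that is not in the text: that the ports are grouped four to a connector, as is usual for mini-SAS cabling. The names are illustrative only.

```python
# SAS port budget for the two HBAs.
# Assumption: 4 ports (lanes) per mini-SAS connector.

HBA_COUNT = 2
PORTS_PER_HBA = 16
LANES_PER_CONNECTOR = 4

backplane_ports = 24    # wired straight to the server chassis backplanes
shelf_ports = 8         # routed through the bulkhead passthroughs to the disk shelves

total_ports = HBA_COUNT * PORTS_PER_HBA
unused_ports = total_ports - (backplane_ports + shelf_ports)

backplane_cables = backplane_ports // LANES_PER_CONNECTOR
shelf_cables = shelf_ports // LANES_PER_CONNECTOR

print(f"Total HBA ports:  {total_ports}")
print(f"Unused ports:     {unused_ports}")
print(f"Backplane cables: {backplane_cables}")
print(f"Shelf cables:     {shelf_cables}")
```

In other words, every port on both HBAs is spoken for.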

Here’s what that looks like:

Of course I’ve had to replace the huge Noctua heatsink with something less quiet but 4U-compatible. It turned out to be a very nicely built unit. I could replace the fan, since it’s a standard 92 mm, but it’s already quieter than the chassis fans.

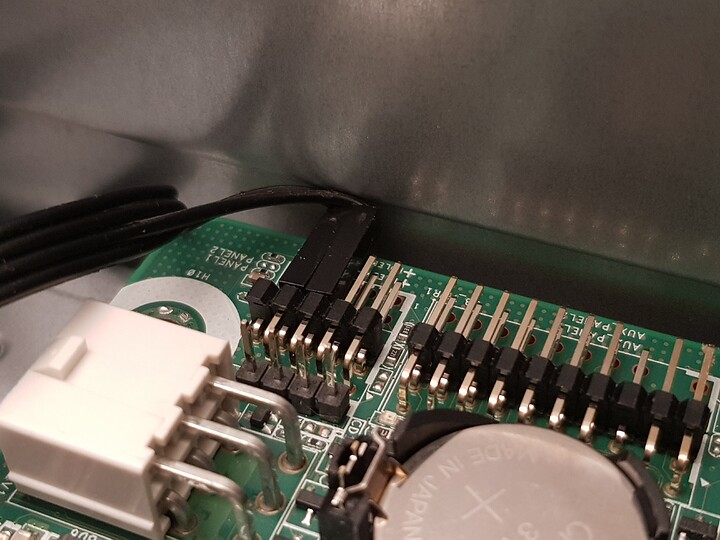

There’s one caveat I’d like to point out: because of all the PCIe slots, ASRock has seen fit to use angled pin headers for the front panel connections. I knew that would be a problem in any 19" chassis. I’ve had to get creative, again:

The case’s front panel connectors use shells that are too long and simply can’t fit. However, the connectors are what the Chinese like to call “Dupont crimp connectors”. They are very popular with the maker crowd, and I have a metric ton of those. They’re the same connectors used on those popular jumper wires:

It turns out that on some versions of those wires, the single-pin shells are shorter than regular shells by a few millimeters. So I “modded” the case’s power switch connector and gained just enough length for the tight fit in my photo.

Not sure if this is a design flaw on ASRock’s part or if they expect everyone to just use IPMI to control the motherboard, but hey, if you ever face this sort of problem, now you know a cheap solution that doesn’t involve modifying your expensive motherboard.

As for my workstation… I still want a new machine. The 6850K upgrade is not a long-term solution. But I’m hoping it’ll last me until a Zen 3 Threadripper is announced, or, who knows, until Intel finally figures out how to 7 nm.

I know this is a thread about workstations, but since many of us like to repurpose our old workstations into servers, maybe I’m not off-topic here.

The NAS has only just been set up. I intend to try all sorts of VM f*uckery in the very near future. I’ll keep you posted! It might be in a NAS-specific thread, though. What do you think?

- but I realize now that your new MB will not fit your Naples anyway.


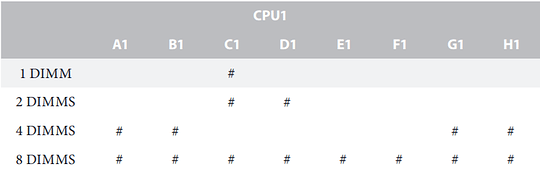

(just kidding). Here’s the info from the manual:
