The only difference is that V2 can’t talk with Tri-Mode HBAs at all. The V3 on the other hand can be connected to such a Tri-Mode HBA - BUT you can only use U.2 or U.3 NVMe SSDs, not SAS or SATA variants. “True” U.3 backplanes could be loaded with all types, since the SFF-8639 connector on the drives remains unchanged.

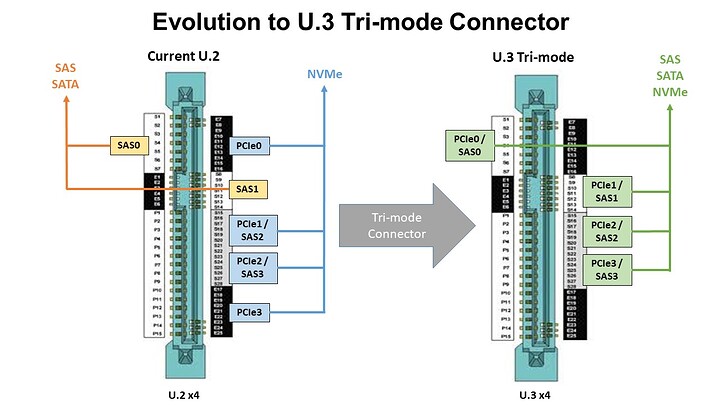

The pins are reassigned for different purposes. The biggest difference is that a U.3 host can support any choice of SATA, SAS, and PCIe NVMe SSDs.

Compatibility-wise, a U.3 PCIe NVMe SSD can be plugged into a U.2 host, and it can detect that and operate its pins in a U.2-compatible way. But a U.3 host cannot make such a determination when a U.2 device is plugged into it.

Source: Evolving Storage with SFF-TA-1001 (U.3) Universal Drive Bays
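
For anyone who wants the rules quoted above in a form they can poke at, here is a minimal Python sketch. It only encodes what the quoted text claims; the `COMPAT` table and the `works` function are made-up names for illustration, and real backplanes (for example ones with a UBM controller) may behave differently.

```python
from typing import Optional

# Rules transcribed from the quoted description only.
# Keys are (host bay type, drive type); anything missing is "not stated".
COMPAT = {
    ("U.2", "U.2 NVMe"): True,   # native case
    ("U.2", "U.3 NVMe"): True,   # drive detects the U.2 host and adapts its pins
    ("U.3", "U.3 NVMe"): True,
    ("U.3", "SAS"): True,
    ("U.3", "SATA"): True,
    ("U.3", "U.2 NVMe"): False,  # host cannot tell that a U.2 device is plugged in
}


def works(host: str, drive: str) -> Optional[bool]:
    """Return True/False per the quoted rules, or None if the text is silent."""
    return COMPAT.get((host, drive))


if __name__ == "__main__":
    for (host, drive), ok in COMPAT.items():
        print(f"{drive} in {host} bay: {'ok' if ok else 'not expected to work'}")
```

Combinations the quote says nothing about, such as a SAS drive in a U.2 bay, come back as `None` rather than a guess.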

I think it’s a nice idea, but having read user complaints on this forum, I’m of the opinion that U.3 is not worth the trouble. You get the flexibility, but all the devices behave in a lowest-common-denominator way. For example, NVMe’s specialized features are hidden, and all you see is a plain block device.

I haven’t been able to find a good U.2-to-U.3 adapter either. Sadly, it’s too niche; I saw that some were made, but they’re no longer available.

Isn’t this lack of nvme features an issue with Universal Backplane Management controllers rather than U.3 drives themselves? I was under the impression U.2 is going away, it seems like all the new enterprise drives are being released as U.3 drives instead of U.2.

I decided to try and look up the specs. It looks like SATA/SAS/U.2/U.3 can all be supported because U.3 backplanes use a UBM chip which handles drive detection, mode switching, and reporting that config to the tri-mode controller.

Here is an example of one:

Table 3-1 in the datasheet shows multiple modes, such as U.3 PCIe-only mode and U.2 PCIe-only mode.

My guess is that because U.3 drives are required to be backwards compatible with U.2, IcyDock simplifies things by only using the U.2 pinout, making the pin reassignment a moot problem. I would also guess that the only change to the V3 was the addition of some active/passive UBM implementation to please Tri-Mode controllers. In theory, Tri-Mode controllers have the right wiring to work with the V2 if you can figure out how to statically set the controller mode to U.2 PCIe only.

I don’t really care about 2.5" U.3 (SATA/SAS) support. My concern was whether it affects compatibility with a SFF-9402 wired breakout/retimer PCIe SlimSAS riser. I was also curious about whether the pin reassignment is an active chip based feature and whether the logic/chip lives on the backplane or host card.

I agree that U.3 SATA/SAS support just over-complicates the issue. I don’t really see the cost benefit for datacenters either. By the time you refit your 2.5" bays for full U.3 support, surely it’s not even worth keeping your 2.5" SAS/SATA SSDs/HDDs. The Tri-Mode controllers alone are stupid expensive and, as @aBav.Normie-Pleb said, you also lose the special features of NVMe through that interface.

On the other hand, I am quite excited about U.3 PCIe x1/x1 support for HDDs by using the old SATA/SAS-only pins. It looks like SATA is a dead end if you want to use future multi-actuator drives properly.

I suspected @aBav.Normie-Pleb might’ve been the one to say it, but I couldn’t locate the post. This neverending story is just too long. lol

I can’t really say since I don’t own any U.3 hosts or devices. I’ve only read the literature about it.

That I’d like to see. I’ve been holding out getting anything U.3 for fear of losing U.2 compatibility.

A somewhat related question & comment.

I have tried a SlimSAS to U.2 cable with both U.2 and U.3 drives. There is a measurable difference in speeds (Gen3 and Gen4 PCIe in my test). This was all done on a Tyan S8030 with an EPYC Milan CPU.

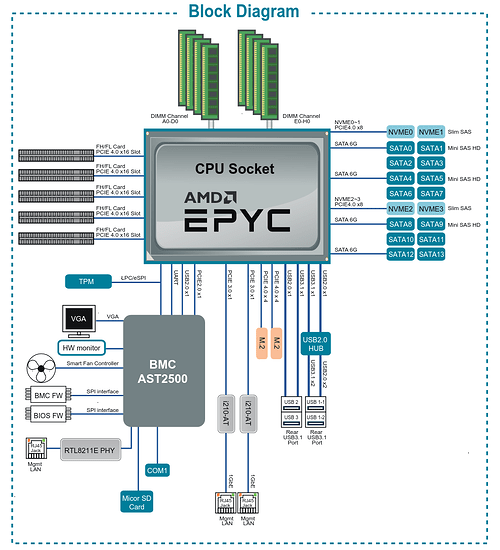
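
If you want to rule the cable in or out before benchmarking, one option is to check what the link actually negotiated. Below is a rough Python sketch that assumes a Linux system, where each PCI device exposes `current_link_speed` and `current_link_width` in sysfs. The helper names `parse_gen` and `nvme_links` are my own, not from any tool.

```python
from pathlib import Path

# GT/s per lane -> PCIe generation
GEN_BY_GTS = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def parse_gen(speed_text):
    """Map a sysfs string such as '16.0 GT/s PCIe' to a PCIe generation."""
    try:
        gts = float(speed_text.split()[0])
    except (IndexError, ValueError):
        return None  # e.g. the kernel reported 'Unknown'
    return GEN_BY_GTS.get(gts)


def nvme_links(sys_root="/sys/class/nvme"):
    """Yield (controller, gen, width) for each NVMe controller Linux sees."""
    root = Path(sys_root)
    if not root.is_dir():
        return
    for ctrl in sorted(root.iterdir()):
        dev = ctrl / "device"  # symlink to the underlying PCI function
        try:
            speed = (dev / "current_link_speed").read_text().strip()
            width = (dev / "current_link_width").read_text().strip()
            yield ctrl.name, parse_gen(speed), int(width)
        except (OSError, ValueError):
            continue  # no PCI link info for this controller


if __name__ == "__main__":
    for name, gen, width in nvme_links():
        print(f"{name}: Gen{gen} x{width}")
```

A drive that trains at Gen3 or at a narrower width than expected on one cable but not on another points at the cable (or the connector wiring) rather than the drive.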

On the other hand, I also tried a miniSAS to U.2 cable that is also supposed to support U.3 drives and PCIe Gen4. This is on the same mainboard, which has 3 miniSAS ports. The U.3 (or U.2) drives are not even detected. No idea why. The third miniSAS port is used to plug in 2 SATA HDDs and 1 SATA SSD (working fine). The manual of the mainboard says that the miniSAS ports are connected to the SATA controller of the CPU. Is this why it doesn’t work with U.2 cables? Should I use a PCIe card or M.2 adapter to get a miniSAS port that is compatible with U.2?

Thanks for any help or pointers.

Cheers!!

The manual for your board isn’t great, but contains the information:

The miniSAS ports only support SATA devices (4 each), the slimSAS connectors (2x) support two NVMe drives each at PCIe Gen4 speeds - provided you attach them with compatible cables.

According to the paperwork, it’s supported. It is really hard to find 3rd party cables that will work though. Try looking up the Broadcom part numbers for the cables listed in the documentation.

I found them after I looked a little harder. I believe they come in the U.3 flavor and require the drives to support SRIS. The earlier SlimSAS 8i pins would have had ePerst# pins for four devices, for a minimum of two lanes per device.

^ Speculations based purely on the literature of course. I’m in the middle of testing and just got confirmation that some cables are on their way from SerialCables.

Check your cables… Some are SAS only and others are PCIe only.

There’s another thread on here where it is confirmed that using Broadcom’s own official x8 U.3 cable isn’t working on their own cards for U.3 NVMe:

I have a feeling Broadcom is only supporting 32 drives through the appropriate backplane.

To avoid disappointing anyone looking for it, I’m not attempting single-lane SSD connections. I’m testing dual port and the exotic Intel Optane H20 which has thus far evaded the best efforts of those trying to get it working on non-Intel systems. I’m going to be happy to get it finally working, although at the cost of over $1,500 so far, it’s already a foregone conclusion that it will not be economical even if it works; you’re better off getting better Optane and better NAND separately.

LOL did someone from the forum return a P411W-32P to Provantage? Picked up an open box one from them for $400 and it seems THIS forum is the most knowledgeable place on earth for this particular NVMe switch

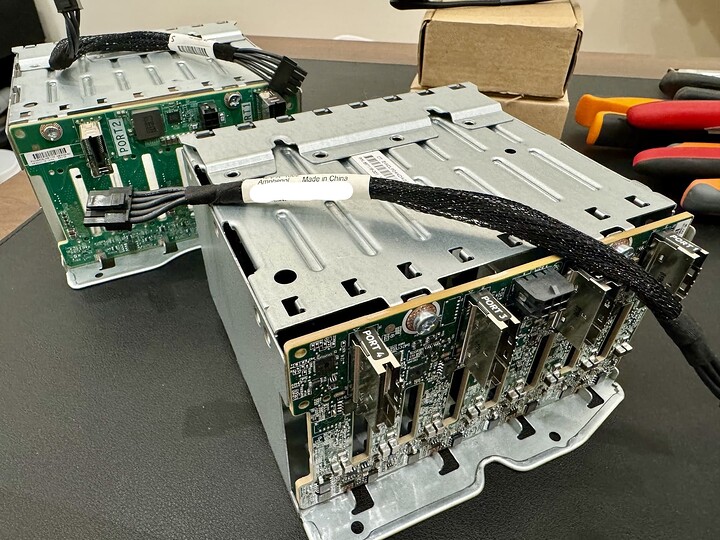

I am trying to get an HPE 826689-B21 NVMe 8x SFF drive cage to work. This is an 8x U.2 NVMe drive cage with an HPE-specific power cable and 4 seemingly SlimSAS 8 / SFF-8654 ports.

(that’s an HPE 826691-B21 in the background, it’s an 8x SFF SAS/SATA cage, more on that later)

I’ve tried to make this work with a C-Payne PCIe 16x Re-Driver on both EPYC and Skylake motherboards and I’ve also tried to make this work with my newly acquired open-box P411W-32P switch - but the NVMe cage simply won’t work at all - it refuses to show any NVMe disk that is installed inside

Thanks to this forum, I checked the Broadcom switch and it was on firmware v4.1.3.1. Proceeded to downgrade the firmware to v4.1.2.1 to avoid the disappearing drives issue.

Windows 2022 actually shows all the correct devices in Device Manager for the NVMe switch, but I still cannot get the HPE drive cage to work. NVMe disks directly connected to both the C-Payne re-driver and the Broadcom switch work just fine.

Does anyone have experience with HPE NVMe cages? Looking at the NVMe riser kit that is supposed to drive these things, I don’t see any redriver or PCIe switch

It looks like the 4 SlimSAS 8i ports are directly taken from a custom dual PCIe 16x riser

… continued below…

… continued from above …

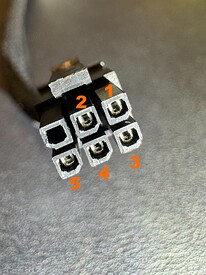

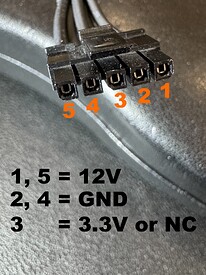

The power cable is definitely proprietary, but folks have figured out the 12V and GND pinouts. (Does NVMe need 3.3V, or does it work fine with just 12V?)

The little 6 pin cable on one end looks like this

which corresponds to a 5-pin custom connector to HP’s PSU backplane

Note that I am only using 12V and GND at the moment - the 3.3V isn’t connected to anything. I’ve used the same cable layout for a 826691-B21 cage which is 8x SFF SAS/SATA and it has worked fine (both the cages utilize the same power cable). I don’t have access to a HP DL380 Gen10 so cannot measure actual voltages from a proper environment

Honestly, I thought the HPE NVMe cage might be one of those newfangled UBM backplanes and hence would work with the Broadcom switch. It’s sad they don’t work, because they’re cheap at $100 a pop second hand/refurbished.

It would not at all surprise me if HP did something really strange like interleaving expected PCIe lanes to make the enclosure difficult to use with non-HPE hardware. If you try running the cage with all the ports at x1 width and drives are then detected, I think that would confirm it.

I know M.2 NVMe exclusively runs off of 3.3V power, but U.2 NVMe has it as optional. Some drives don’t use it, but I think the majority do require it. It’s also possible that there is a DC-DC converter on the backplane that produces 3.3V; I see a big inductor on the 826691-B21 cage, which is usually a dead giveaway for a DC-DC converter.

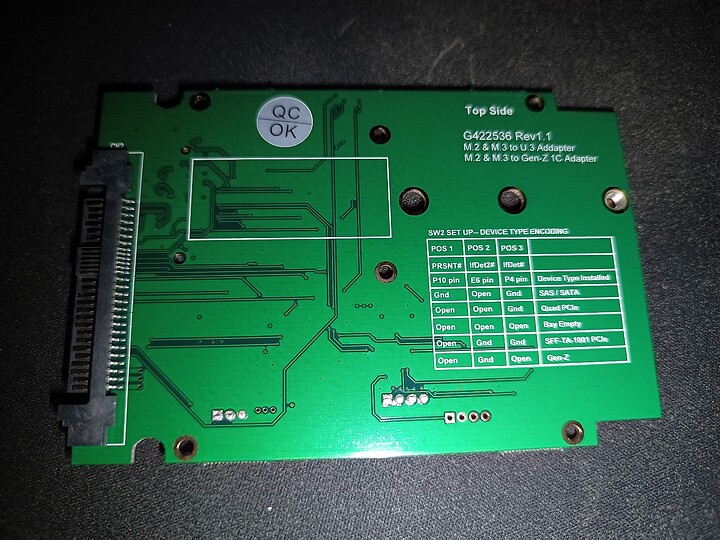

On a separate topic, I got one of the M.2/M.3 adapters that let me spoof different device encodings, like U.2, U.3, PCIe HDD and Gen-Z. I’m going to try to get this to work with a single-lane (x1) PCIe connection on my PCIe card (which I believe everyone was having trouble doing?):

I too, have this adapter. I’ve had it for about a month and have not gotten it working with even a plain quad-lane PCIe M.2 SSD yet though. Perhaps the switches are not meant to work out-of-box, but require specific tuning first.

What cables have you tried using with it? I’m planning on trying single lane U.3 cables first.

Yeah, I noticed the positions of the switches as they came don’t correlate with any type of useful device; I think by default it is configured as an “open bay”.

Another thing that confused me was the little orange covers over the configuration switches. At first I thought they were there to prevent me from changing the switches, until I realized they are only there for the pick and place machine that assembles the boards to grab.

Will try that. What’s the best way to force the cage to NVMe at x1?