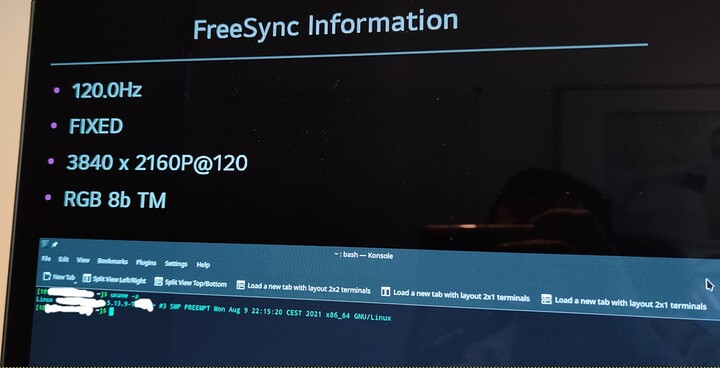

I’m currently running an LG CX 48" on Linux at 4k@120 with 8b RGB, driven by an RX 6900 XT.

It took quite a lot of effort to get here. I tried the EDID route, but didn’t get beyond 4k@120 YCbCr 4:2:0, which is objectively a terrible experience. I also tried manually setting modes with xrandr, but that also led nowhere.

I eventually concluded that the problem was in the kernel. My thinking was that it was somehow deciding on the ‘wrong’ maximum pixel clock, causing the AMD kernel driver to drop the 4k@>60Hz RGB modes that are advertised in the EDID (SVD 117 and 118). After a surprisingly short search through the AMD driver, I made a few small changes to ignore the max pixel clock sent by the monitor, and that bumped me up to 4k@120 8b RGB. 10b should be doable with a similar small change.
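To make the pixel clock reasoning concrete, here is a rough sketch of the arithmetic as I understand it. The helper names are made up for illustration and this is not the kernel's code. It assumes the CTA-861 totals for SVD 118 (4400 x 2250 including blanking), that 4:2:0 halves the required clock, that deeper colour scales it by bits/8, and that the TV reports a 600000 kHz limit (the HDMI 2.0 ceiling; I haven't confirmed what the CX actually sends).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: pixel clock in kHz from total (active + blanking)
 * timings and the refresh rate. */
static uint32_t pixel_clock_khz(uint32_t htotal, uint32_t vtotal,
                                uint32_t refresh_hz)
{
    return (uint32_t)((uint64_t)htotal * vtotal * refresh_hz / 1000);
}

/* Hypothetical helper: clock in kHz that a mode needs on the link, given
 * its pixel clock, chroma subsampling and bits per component.
 * Assumption: 4:2:0 halves the clock, deep colour scales it by bits/8. */
static uint32_t normalized_clk_khz(uint32_t pix_clk_khz, bool ycbcr420,
                                   unsigned bits_per_component)
{
    assert(bits_per_component == 8 || bits_per_component == 10 ||
           bits_per_component == 12 || bits_per_component == 16);

    uint64_t clk = pix_clk_khz;
    if (ycbcr420)
        clk /= 2;
    clk = clk * bits_per_component / 8;
    return (uint32_t)clk;
}

/* The comparison the driver makes against the sink's advertised limit. */
static bool mode_fits(uint32_t needed_khz, uint32_t max_tmds_clock_khz)
{
    return needed_khz <= max_tmds_clock_khz;
}
```

Under those assumptions, `pixel_clock_khz(4400, 2250, 120)` comes out at 1188000 kHz. With 8b RGB that is far above a 600000 kHz limit, so the mode gets dropped, while 8b YCbCr 4:2:0 needs 594000 kHz and squeaks through. That would match what I saw before patching. 10b RGB would need 1485000 kHz.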

It’s not perfect: sometimes after logging in, the monitor shows ‘no signal’. I then have to restart the monitor to display an image. Audio over HDMI is also broken when using 120Hz.

But oh man, I can’t imagine using a computer at less than 120Hz anymore. This is still by far the best solution I could find.

I downloaded the kernel 5.13.9 source code.

I made two changes:

In drivers/gpu/drm/amd/display/amdgpu_dm/amdgpu_dm.c:adjust_colour_depth_from_display_info() on line 7168, I changed

if (normalized_clk <= info->max_tmds_clock) {

to

if (normalized_clk <= 12000000) {

In drivers/gpu/drm/amd/display/dc/dce/dce_link_encoder.c:dce110_link_encoder_validate_hdmi_output() on line 784, I changed

enc110->base.features.max_hdmi_pixel_clock))

to

(adjusted_pix_clk_khz > 12000000))

I compiled with this script:

sudo echo "need root"

make -j24

sudo make modules_install -j24

sudo make install

sudo rm -f /boot/vmlinuz-5.13-4k120-x86_64

sudo cp /boot/vmlinuz /boot/vmlinuz-5.13-4k120-x86_64

sudo mkinitcpio -p linux513patched

Reboot, select the new kernel, and enjoy 4k120 on Linux with an AMD 6xxx GPU.

This is obviously an ugly solution btw. A more correct approach would be to use max(max_tmds_clock, max(pixel clock for each SVD advertised by the EDID)) as the maximum pixel clock instead of just the constant 12000000.
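A minimal sketch of what that max() could look like, in plain C rather than actual driver code. The function name and the idea of passing the SVD pixel clocks in as an array are hypothetical. In the real driver you'd have to collect those clocks from the parsed EDID modes, which I haven't worked out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: use the larger of the sink's advertised max TMDS
 * clock and the highest pixel clock of any SVD mode in the EDID (all in
 * kHz), instead of a hard-coded constant. */
static uint32_t effective_max_clock_khz(uint32_t max_tmds_clock_khz,
                                        const uint32_t *svd_pix_clk_khz,
                                        size_t n_svds)
{
    assert(svd_pix_clk_khz != NULL || n_svds == 0);

    uint32_t max_khz = max_tmds_clock_khz;
    for (size_t i = 0; i < n_svds; i++) {
        if (svd_pix_clk_khz[i] > max_khz)
            max_khz = svd_pix_clk_khz[i];
    }
    return max_khz;
}
```

With a sink that reports 600000 kHz but lists a mode needing 1188000 kHz, this returns 1188000, so the advertised mode passes the check without opening the limit up all the way to 12000000.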

Even better would be actual HDMI 2.1 support, but hey, that seems to be off the table for now.