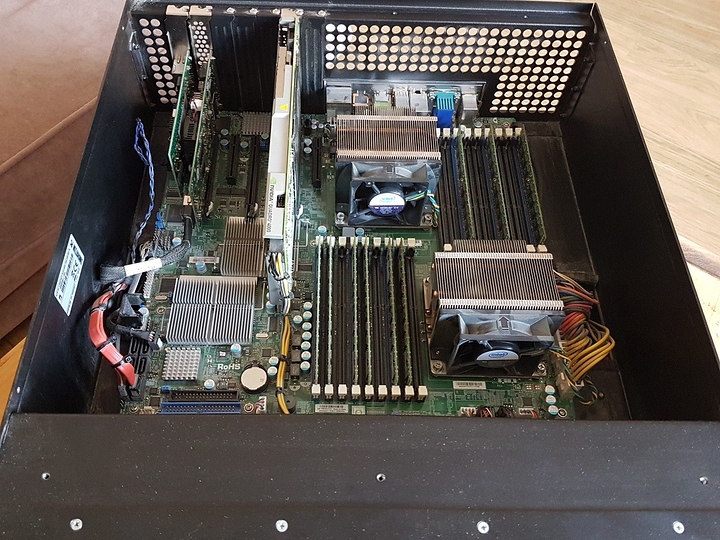

Thanks to eBay, and my inability to resist a bargain (£92!), I’ve recently acquired this:

It’s a dual Xeon X5690, with 36GB (9x4GB) of Kingston 1.35V PC3-10600R (spec), all mounted on an EE-ATX Supermicro X8DAH+-F Rev2.01 motherboard (manual).

There’s also an ARC-1212 4-port SAS/SATA RAID card (manual), an Intel dual gigabit NIC, a Quadro 4000, and a huge PSU (something silly like 865W).

I’m being fairly exhaustive with the specs because my questions are quite specific.

The current box is huge, tank-like in its construction, and exceedingly loud, so I intend to transplant the machine into an old Coolermaster Cosmos 1000 tower, and swap out the CPU HSFs for Hyper 212 EVOs.

Besides a NAS, I don’t really know precisely what I’m going to use it for yet, so I’ve just stuck Windows 10 Pro on it (which seems to perfectly support all the hardware). So for now my queries are focused on the hardware side of things.

So here they are:

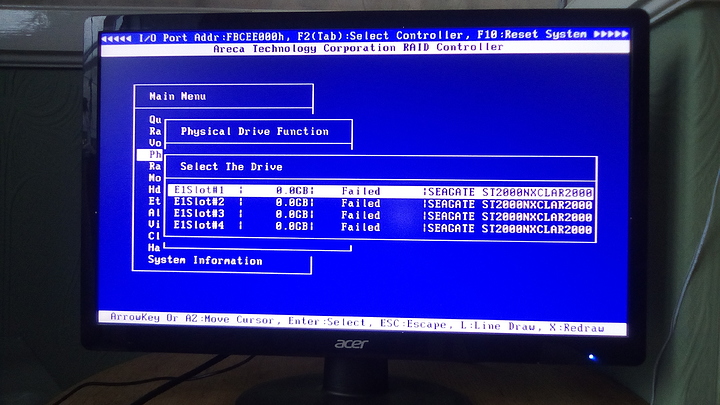

- The RAID controller.

a) The current cabling to the drive bays appears to be standard SATA cables, not SAS. Will the cables need replacing if I intend to attach SAS drives, or will a set of SATA to SAS adapters suffice?

b) Are there going to be any hideous incompatibilities with this old SAS RAID controller? Should I restrict myself to a maximum size and/or type of drive to avoid problems?

- The Memory

The current configuration is 2 dimms per channel on CPU 1, and 1 dimm per channel on CPU 2.

According to the manual, the full 1333MHz is attainable on this CPU model at 1.5V, so long as only channels 1 & 2 are populated and none of the dimms have more than 2 ranks.

The motherboard appears to be quite smart about this: it’s currently running the 2 dimm per channel memory on CPU 1 at 1.5V, while the sparser memory on CPU 2 is only running at 1.35V, thus maintaining 1333MHz.

a) Is running the memory of the different CPUs at different voltages intrinsically bad?

Should I pull out 12GB to bring both CPUs down to only 1 dimm per channel, and so allow 1.35V operation for both?

b) There appears to be no mention in the manual of capacities affecting speed.

Does this mean I can put 3 8GB (1 or 2 rank) dimms in CPU 2’s open memory slots for a cool 60GB without compromising speed (with just a bump of CPU 2’s memory to 1.5V)?

c) The manual warns against mixing voltages, but doesn’t say anything about CAS latency or other timings. If I buy any more RAM, do I need to precisely match what’s there already?

If so, what should match?

memory in the same channel positions? (A1/B1/C1),

or memory within each channel? (A1/A2/A3)

and what about memory across CPU banks?
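To make the memory options above concrete, here is a quick Python sketch of the arithmetic. The layout (three channels per CPU, sizes in GB) is only my reading of the current population as described above, and the function and variable names are made up for illustration. It tallies capacity and dimms per channel; it does not model what the board will actually do with speed or voltage.

```python
# DIMM sizes in GB, per channel, per CPU (my reading of the current layout).
current = {
    "CPU1": [[4, 4], [4, 4], [4, 4]],  # 2 DIMMs per channel
    "CPU2": [[4], [4], [4]],           # 1 DIMM per channel
}

def total_gb(layout):
    """Sum every DIMM across all CPUs and channels."""
    return sum(size for cpu in layout.values() for ch in cpu for size in ch)

def dimms_per_channel(layout):
    """Worst-case DIMM count per channel for each CPU."""
    return {cpu: max(len(ch) for ch in chans) for cpu, chans in layout.items()}

# Option (a): pull 12GB so both CPUs run 1 DIMM per channel.
trimmed = {cpu: [ch[:1] for ch in chans] for cpu, chans in current.items()}

# Option (b): add one 8GB DIMM to each of CPU 2's channels.
expanded = {
    "CPU1": current["CPU1"],
    "CPU2": [ch + [8] for ch in current["CPU2"]],
}

for name, layout in [("current", current), ("trimmed", trimmed), ("expanded", expanded)]:
    print(name, total_gb(layout), "GB", dimms_per_channel(layout))
```

So option (a) leaves 24GB at 1 dimm per channel on both CPUs, and option (b) gives 60GB at 2 dimms per channel on both.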

- The GPU.

Does the Quadro 4000 have any useful purpose?

I currently intend to just flog it, as speed-wise it seems lacklustre, though it is quite generously endowed with 2GB of VRAM.

I assume it must have a use, as it still commands a respectable price on eBay?

- I’m aware that I will have to drill a few mounting points to get an EE-ATX board properly supported in the Cosmos 1000 tower, but are there any other pitfalls I might not have considered in the transplant?

Thanks for any and all input!