Hi,

I got two of the Intel P4500 4 TB U.2 SSDs that have been recommended by @wendell in a few videos for potentially being a great deal when finding them used.

Both units have the most recent firmware installed (per Intel Memory and Storage Tool 1.0.5) and work fine with full expected performance when connected via PCIe 3.0 x4 from the X570 chipset, so I doubt I’ve purchased defective lemons.

On the other hand I got two Broadcom HBA 9400 8i8e controllers with the latest firmware and drivers installed (P14 package).

One of the HBAs is connected with PCIe 3.0 x8 via CPU, one with PCIe 3.0 x8 via the motherboard’s X570 chipset that is connected to a Zen 2 CPU (3900 PRO) via a PCIe 4.0 x4 link.

Nothing else is using the system (test setup). Windows 10 1909 was re-installed a few days ago on an erased SATA SSD, using only the latest drivers, and is at Windows Update patch level 2020-04.

Broadcom HBA 9400 HBAs can directly connect to two NVMe SSDs on their internal SAS HD ports with a PCIe 3.0 x4 link to each drive.

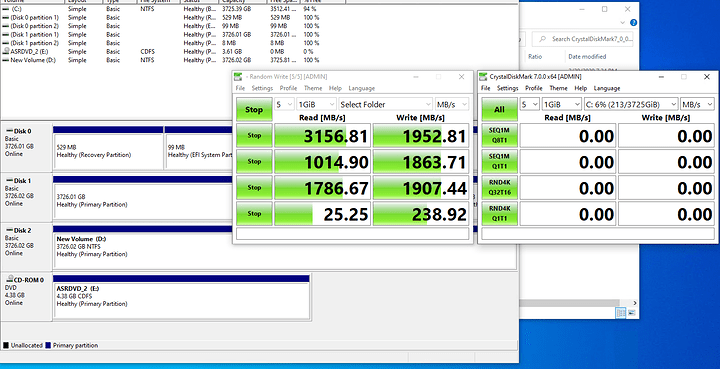

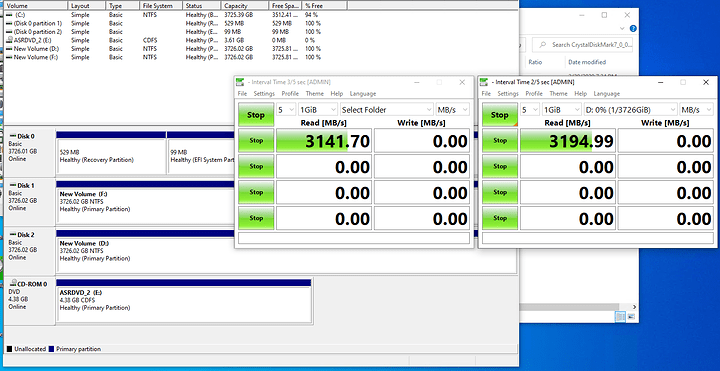

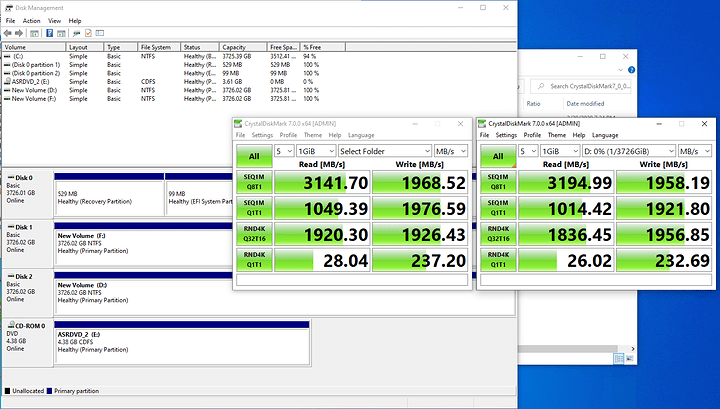

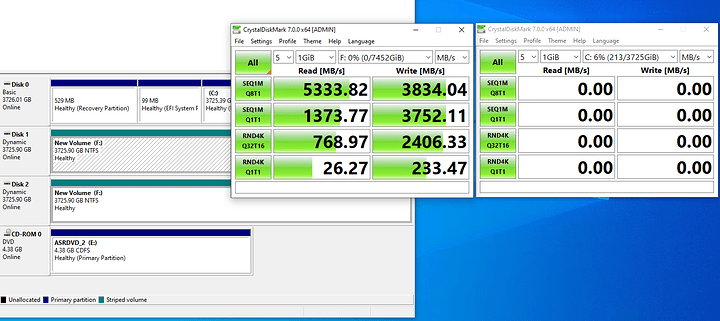

This works okay with a couple of Intel Optane drives (tested 905P 480 GB and DC P4801X 100 GB; a bit slower than directly connected to the motherboard, but not that bad), but when connecting a P4500 the sequential speeds are abysmal (via HBA: 400-600 MB/s read, 1200-1300 MB/s write; directly via motherboard: ca. 3300 MB/s read, ca. 2000 MB/s write).

I’ve done various CrystalDiskMark tests and A-B tested the HBAs, U.2 cables and SSDs.

I doubt that there is a hardware defect since…

- Both HBAs and both P4500 SSDs show similar results

- It doesn’t matter if an HBA is connected via CPU or chipset PCIe lanes (as expected, since the ASUS Pro WS X570-Ace motherboard can connect an AIC to the chipset PCIe slot with a x8 link)

- There isn’t a bandwidth bottleneck since I’m only connecting two NVMe SSDs to each HBA, not using additional drives via the external SAS connectors.

- All SSDs erased, initialized with GPT, a single large partition with NTFS/4 kBytes per sector

- Open-air test bench setup, the HBAs are cooled with a 140 mm Noctua fan (reported temperature around 50 °C), same goes for the SSDs (reported temperature below 40 °C), so there is no thermal throttling.

- Latest BIOS 1302 is installed on the ASUS Pro WS X570-Ace

- The CPU+Motherboard+RAM (128 GB ECC DDR4-2666) platform performs as expected when looking at CPU speed.
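As a rough sanity check on the bandwidth point above, here is a minimal sketch of the PCIe 3.0 arithmetic. It only accounts for the 8 GT/s per-lane rate and 128b/130b line coding; packet and protocol overhead are ignored, so real-world numbers will be somewhat lower. The function name is just for illustration.

```python
# Rough PCIe 3.0 bandwidth estimate.
# Assumption: only line coding is considered, TLP/protocol overhead is ignored.
GT_PER_S = 8.0          # PCIe 3.0 raw rate per lane, in GT/s
ENCODING = 128 / 130    # 128b/130b line coding efficiency


def pcie3_bandwidth_mb_s(lanes: int) -> float:
    """Approximate bandwidth in MB/s (10^6 bytes/s) of a PCIe 3.0 link."""
    return GT_PER_S * 1e9 * ENCODING / 8 * lanes / 1e6


uplink = pcie3_bandwidth_mb_s(8)     # HBA to host (x8)
per_drive = pcie3_bandwidth_mb_s(4)  # HBA to each NVMe SSD (x4)

print(f"x8 uplink:    {uplink:.0f} MB/s")
print(f"x4 per drive: {per_drive:.0f} MB/s")
print(f"two drives:   {2 * per_drive:.0f} MB/s at most")
```

Two x4 drives together can at most match the x8 uplink, and a single drive under test needs only half of it, so the link widths alone shouldn't explain reads of 400-600 MB/s.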

Here are the benchmark results (only listed the results with the HBA directly connected to CPU PCIe lanes for potentially the best results the HBA can offer, the results for the HBA in the X570 chipset slot are very similar):

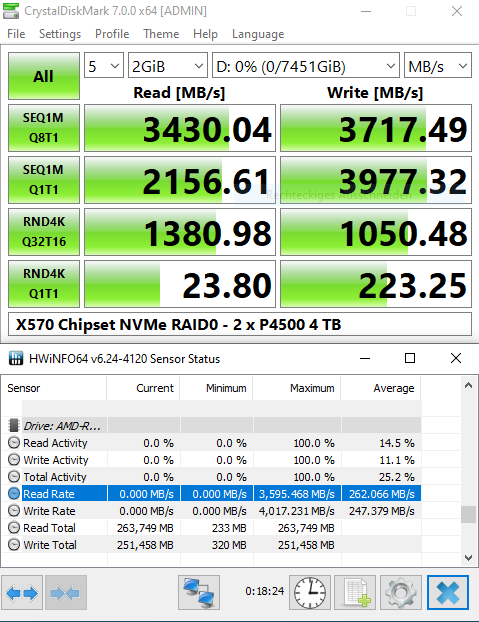

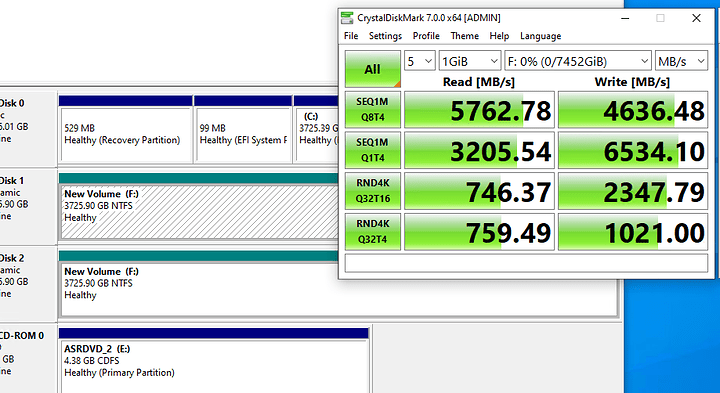

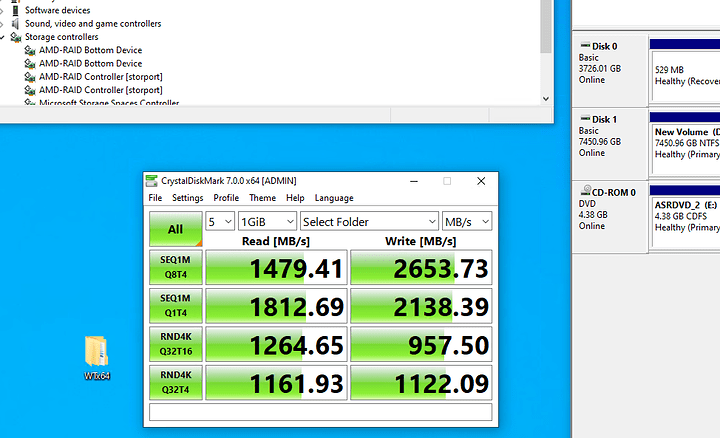

1) P4500 4 TB results with X570 chipset:

[Read]

Sequential 1MiB (Q= 8, T= 1): 3371.715 MB/s [ 3215.5 IOPS] < 2486.20 us>

Sequential 1MiB (Q= 1, T= 1): 971.740 MB/s [ 926.7 IOPS] < 1078.45 us>

Random 4KiB (Q= 32, T=16): 1793.357 MB/s [ 437831.3 IOPS] < 1168.26 us>

Random 4KiB (Q= 1, T= 1): 24.793 MB/s [ 6053.0 IOPS] < 164.99 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 2051.279 MB/s [ 1956.3 IOPS] < 4081.96 us>

Sequential 1MiB (Q= 1, T= 1): 1974.290 MB/s [ 1882.8 IOPS] < 530.66 us>

Random 4KiB (Q= 32, T=16): 1961.160 MB/s [ 478798.8 IOPS] < 1068.35 us>

Random 4KiB (Q= 1, T= 1): 228.774 MB/s [ 55853.0 IOPS] < 17.79 us>

Profile: Default

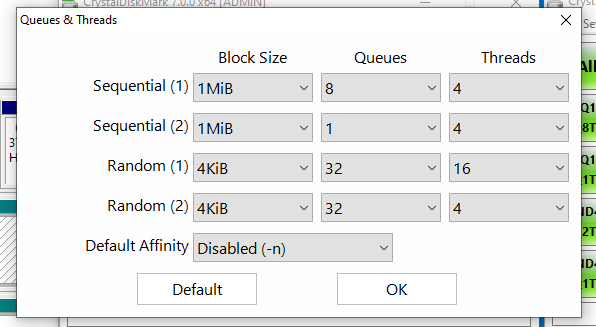

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 1:02:39

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel DC P4500 4 TB/PCIe 3.0 x4 (U.2) via X570 Chipset

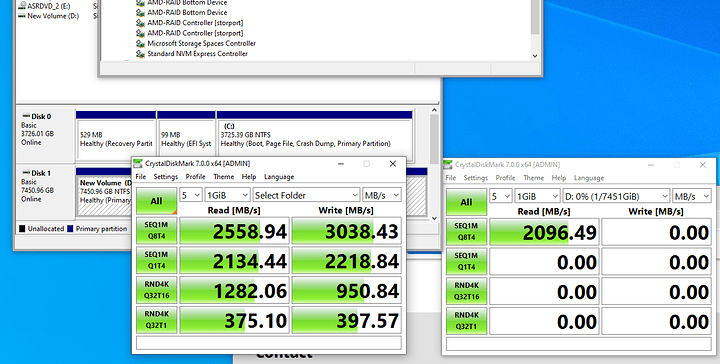

2) P4500 4 TB results on port 0 of a Broadcom HBA 9400 8i8e:

[Read]

Sequential 1MiB (Q= 8, T= 1): 394.730 MB/s [ 376.4 IOPS] < 21179.58 us>

Sequential 1MiB (Q= 1, T= 1): 1284.536 MB/s [ 1225.0 IOPS] < 815.78 us>

Random 4KiB (Q= 32, T=16): 1467.239 MB/s [ 358212.6 IOPS] < 1428.24 us>

Random 4KiB (Q= 1, T= 1): 23.763 MB/s [ 5801.5 IOPS] < 172.15 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 1283.654 MB/s [ 1224.2 IOPS] < 6520.17 us>

Sequential 1MiB (Q= 1, T= 1): 1146.157 MB/s [ 1093.1 IOPS] < 914.24 us>

Random 4KiB (Q= 32, T=16): 1915.610 MB/s [ 467678.2 IOPS] < 1093.78 us>

Random 4KiB (Q= 1, T= 1): 167.853 MB/s [ 40979.7 IOPS] < 24.30 us>

Profile: Default

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 1:59:41

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel DC P4500 4 TB/HBA 9400 8i8e P0 (x8 via CPU)
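To make the odd Q8 vs. Q1 read behaviour above easier to see, here is a small sketch that cross-checks the reported throughput against the reported latency using Little's law (throughput ≈ queue depth × block size / latency). It assumes the latency column in CrystalDiskMark is the average per-request latency; the helper name is made up for this example.

```python
MIB = 1024 * 1024  # CrystalDiskMark's "1MiB" block size in bytes


def implied_throughput_mb_s(queue_depth: int, block_bytes: int, latency_us: float) -> float:
    """Throughput in MB/s (10^6 bytes/s) implied by Little's law."""
    return queue_depth * block_bytes / (latency_us * 1e-6) / 1e6


# Sequential 1MiB reads of the P4500 on HBA port 0, values copied from the log above.
hba_q8 = implied_throughput_mb_s(8, MIB, 21179.58)  # reported: 394.730 MB/s
hba_q1 = implied_throughput_mb_s(1, MIB, 815.78)    # reported: 1284.536 MB/s

print(f"Q8 implied: {hba_q8:.1f} MB/s")
print(f"Q1 implied: {hba_q1:.1f} MB/s")
print(f"Q8 latency is {21179.58 / 815.78:.1f}x the Q1 latency")
```

The implied values line up with the reported ones, so the numbers are at least self-consistent: with eight requests in flight, each 1 MiB read takes roughly 26 times as long as a single outstanding read, which is why Q8 ends up slower than Q1 on the HBA.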

3) P4500 4 TB results on port 1 of a Broadcom HBA 9400 8i8e:

[Read]

Sequential 1MiB (Q= 8, T= 1): 565.320 MB/s [ 539.1 IOPS] < 14798.60 us>

Sequential 1MiB (Q= 1, T= 1): 1328.433 MB/s [ 1266.9 IOPS] < 788.93 us>

Random 4KiB (Q= 32, T=16): 1481.024 MB/s [ 361578.1 IOPS] < 1414.98 us>

Random 4KiB (Q= 1, T= 1): 24.026 MB/s [ 5865.7 IOPS] < 170.27 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 1187.603 MB/s [ 1132.6 IOPS] < 7046.48 us>

Sequential 1MiB (Q= 1, T= 1): 1103.407 MB/s [ 1052.3 IOPS] < 949.69 us>

Random 4KiB (Q= 32, T=16): 1816.169 MB/s [ 443400.6 IOPS] < 1153.77 us>

Random 4KiB (Q= 1, T= 1): 166.331 MB/s [ 40608.2 IOPS] < 24.51 us>

Profile: Default

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 1:02:06

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel DC P4500 4 TB/HBA 9400 8i8e P1 (x8 via CPU)

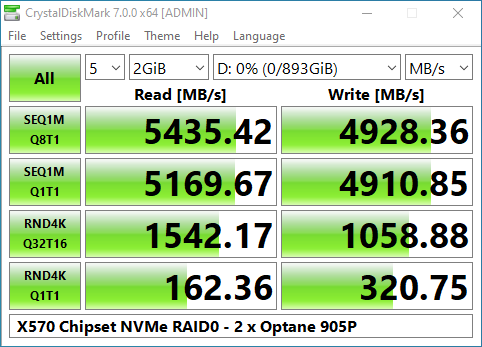

Contrasting results with Optane 905P SSDs:

4) 905P 480 GB results with X570 chipset:

[Read]

Sequential 1MiB (Q= 8, T= 1): 2783.138 MB/s [ 2654.2 IOPS] < 3012.56 us>

Sequential 1MiB (Q= 1, T= 1): 2553.412 MB/s [ 2435.1 IOPS] < 410.43 us>

Random 4KiB (Q= 32, T=16): 2434.875 MB/s [ 594451.9 IOPS] < 860.40 us>

Random 4KiB (Q= 1, T= 1): 228.289 MB/s [ 55734.6 IOPS] < 17.83 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 2486.836 MB/s [ 2371.6 IOPS] < 3367.93 us>

Sequential 1MiB (Q= 1, T= 1): 2318.443 MB/s [ 2211.0 IOPS] < 451.93 us>

Random 4KiB (Q= 32, T=16): 2478.988 MB/s [ 605221.7 IOPS] < 845.04 us>

Random 4KiB (Q= 1, T= 1): 206.504 MB/s [ 50416.0 IOPS] < 19.72 us>

Profile: Default

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 2:00:29

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel Optane 905P 480GB/PCIe 3.0 x4 (U.2) via X570 Chipset

5) 905P 480 GB results on port 0 of a Broadcom HBA 9400 8i8e:

[Read]

Sequential 1MiB (Q= 8, T= 1): 2748.844 MB/s [ 2621.5 IOPS] < 3049.99 us>

Sequential 1MiB (Q= 1, T= 1): 2004.216 MB/s [ 1911.4 IOPS] < 522.90 us>

Random 4KiB (Q= 32, T=16): 2429.355 MB/s [ 593104.2 IOPS] < 836.27 us>

Random 4KiB (Q= 1, T= 1): 175.765 MB/s [ 42911.4 IOPS] < 23.19 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 2232.759 MB/s [ 2129.3 IOPS] < 3750.86 us>

Sequential 1MiB (Q= 1, T= 1): 1619.994 MB/s [ 1544.9 IOPS] < 646.81 us>

Random 4KiB (Q= 32, T=16): 2128.465 MB/s [ 519644.8 IOPS] < 984.38 us>

Random 4KiB (Q= 1, T= 1): 155.891 MB/s [ 38059.3 IOPS] < 26.15 us>

Profile: Default

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 1:00:54

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel Optane 905P 480 GB/HBA 9400 8i8e P0 (x8 via CPU)

6) 905P 480 GB results on port 1 of a Broadcom HBA 9400 8i8e:

[Read]

Sequential 1MiB (Q= 8, T= 1): 2746.736 MB/s [ 2619.5 IOPS] < 3052.52 us>

Sequential 1MiB (Q= 1, T= 1): 1988.874 MB/s [ 1896.7 IOPS] < 526.92 us>

Random 4KiB (Q= 32, T=16): 2429.357 MB/s [ 593104.7 IOPS] < 835.73 us>

Random 4KiB (Q= 1, T= 1): 175.590 MB/s [ 42868.7 IOPS] < 23.21 us>

[Write]

Sequential 1MiB (Q= 8, T= 1): 2229.133 MB/s [ 2125.9 IOPS] < 3756.48 us>

Sequential 1MiB (Q= 1, T= 1): 1615.661 MB/s [ 1540.8 IOPS] < 648.56 us>

Random 4KiB (Q= 32, T=16): 2123.493 MB/s [ 518430.9 IOPS] < 986.68 us>

Random 4KiB (Q= 1, T= 1): 155.849 MB/s [ 38049.1 IOPS] < 26.16 us>

Profile: Default

Test: 1 GiB (x5) [Interval: 5 sec] <DefaultAffinity=DISABLED>

Date: 2020/04/22 1:59:11

OS: Windows 10 Enterprise [10.0 Build 18363] (x64)

Comment: Intel Optane 905P 480 GB/HBA 9400 8i8e P1 (x8 via CPU)
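To put the six runs side by side, here is a short sketch that expresses the HBA results as a fraction of the direct (X570 chipset) result for the sequential 1MiB Q8T1 read test. The numbers are copied from the logs above; the dictionary layout and helper name are only for illustration.

```python
# Sequential 1MiB (Q=8, T=1) read results in MB/s, copied from the logs above.
seq_q8_read = {
    "P4500 4 TB": {"direct": 3371.715, "hba_p0": 394.730, "hba_p1": 565.320},
    "905P 480 GB": {"direct": 2783.138, "hba_p0": 2748.844, "hba_p1": 2746.736},
}


def relative_to_direct(results: dict) -> dict:
    """Return each HBA result as a fraction of the direct-attached result."""
    return {
        port: value / results["direct"]
        for port, value in results.items()
        if port != "direct"
    }


for drive, results in seq_q8_read.items():
    for port, ratio in relative_to_direct(results).items():
        print(f"{drive:12s} {port}: {ratio:6.1%} of direct")
```

On these numbers the 905P keeps nearly all of its direct-attached read speed behind the HBA, while the P4500 drops to well under a fifth of it, so whatever is going wrong looks specific to the P4500/HBA combination rather than the HBA in general.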

Any ideas? I’m dumbfounded.

Thanks for any advice!

Regards,

aBavarian Normie-Pleb

1_CDM_X570_U2(3.0 x4)_NVMe_DC_P4500_4TB.txt (2.5 KB)

2_CDM_HBA9400_P0_NVMe_DC_P4500_4TB.txt (2.5 KB)

3_CDM_HBA9400_P1_NVMe_DC_P4500_4TB.txt (2.5 KB)

4_CDM_X570_U2(3.0 x4)_NVMe_Optane_905P_480GB.txt (2.5 KB)