so i’ve read several reviews about the new intel 12th gen and its massive power draw numbers when overclocked at 100% load, but the spec nobody is talking about is idle power draw when not using a discrete gpu. like just cpu, mobo, ram, and os drive, all run at stock speeds/xmp speeds. personally i’d love to know these numbers so i can plan my home server upgrades.

People are more interested in what it can do than in what it draws at idle.

We’ll see those figures at some point, but don’t expect them to be the first breaking news about Intel’s new CPUs.

But there doesn’t seem to be ECC support, so that might be interesting to know for your home server.

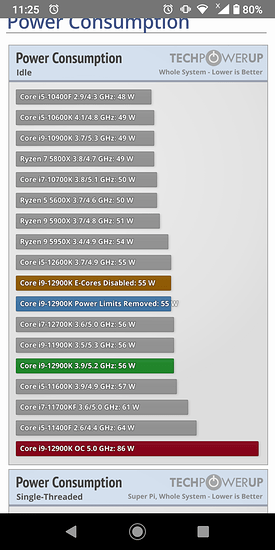

There are quite a few idle power draw numbers… TechPowerUp always gives multiple numbers: idle, single core, multicore, stress, etc etc…

You want idle, there’s idle.

It’s the same GPU for all systems, so the only difference is the CPU and the mobo/ram cause ddr5…

Whatever the idle power is, the facts are Alder Lake is both powerful and power hungry. Intel just isn’t efficient. Their 10nm process is super bad, even if their architecture is not…

Haven’t seen an OC run yet. But approaching 250W on 100% stock, is finger-food friendly

Idle or loaded, that 12900K is a massive heater. Most of the reviews I watched yesterday tested the venerable Noctua NH-12(?) cooler and 360 AIOs. The AIOs still barely kept the 12900K from throttling under stock conditions. AIO makers are going to have to redesign their systems with better pumps, better fin efficiency, better fans, or something.

It might be a beast of a compute unit, but with that much heat output, it’s definitely a no go for most people unless they have an a/c unit pointed right at it.

I am happy for the competition and glad that Intel is in the game, but yeah… the heat they pump out is just not competitive with AMD, and the delta in performance is simply not worth it for me to switch any time soon.

12c/24t is already more than I need.

Based on the leaks that Moore’s Law is Dead on YouTube put out earlier this week on the upcoming AMD chips, they will be a massive blow to Intel again, but with lower power draw and an efficiency boost. That Zen 4D model is looking really interesting.

it’s nearly winter so I have not mowed my lawn yet, meaning I have not listened to the most recent podcast yet

I don’t listen to the podcast, not enough time most of the time, but I do catch his Youtube blurbs occasionally. In this last one, he mentioned the guy that does the AMD overclock utility, who we know works very closely with AMD, and he blurbed a thing about release timeframe for a new CPU and some things on the chips themselves.

fingers crossed we get another AM4 chip that is X570 compatible, I don’t expect it but man it would be nice.

Zen 3D with V-Cache is expected to be released in 2022.

It should be compatible with AM4 and X570. AMD is advertising about a 15% average uplift in gaming performance.

This could be much more interesting for a server application as OP wants. AMD is much more energy efficient right now.

According to the AMD sit-down from a couple weeks ago, didn’t they say there would be 2 releases in 2022? 3D cache at the beginning, and Zen 4 at the end of the year?

I did not watch it so I cannot comment on Zen 4. If OP wants to plan his/her home server upgrades, I think it is worth noting that Zen 4 will be on a 5nm process, so even less power draw? Zen 3D will still be on 7nm. I think for energy-conscious ppl AMD makes more sense. Or even a Mac Mini M1

right now my lab/cluster is running mostly low voltage Xeons, and I am not looking to change because I don’t need more, and RAM tends to be my limitation more than CPU speed. Still, it would be awesome if I could get a bunch of low power CPUs and DDR4 based systems to transition over to.

I am a big fan of home servers being used enterprise gear, just because the cost and support are awesome. Plus ECC is really nice to have.

I used to like the idea of using old enterprise hardware at home. But over the years, I changed my opinion. For home usage they are big, heavy, and most importantly loud, and they consume a lot of power compared to modern hardware. Servers are designed to run stuff 24/7 and not idle very much. At home, there may be some setups that are run like a business data center, but what happens most of the time is that the servers are accessed heavily during a certain period, then are basically idle for the rest of the time (some exceptions being security cameras, home automation and their associated storage boxes).

For home, I would like to see more designs that rack-mount SBCs and cool them passively or by pushing air very quietly. There is Pi rack-mounting hardware available (both aluminum and 3D printed brackets), some better than others, some that put all the IO at the front. Homelabs don’t need to be bigger than a 12U switch rack, with most not needing more than 6U.

Not many people want to invest in backup power generators, so I believe homelabs should be designed to be resilient against reboots and to automatically shut down when a power outage happens (obviously, UPSes are still needed to keep them alive until they shut down). They should also have a schedule to power off at certain times and either auto-start at certain hours (if the BIOS has that kind of support) or be powered back on via WoL at a defined time.
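For the WoL part, here is a minimal Python sketch of building and sending a Wake-on-LAN magic packet. The function names, the placeholder MAC address, and the choice of UDP port 9 with a limited broadcast address are assumptions for illustration, not something from this thread; the target machine also needs WoL enabled in its BIOS/NIC settings, and the scheduling itself (cron, a systemd timer, etc.) is left out.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return the 102-byte Wake-on-LAN magic packet for a MAC address."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected 12 hex digits in MAC, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    # Magic packet layout: 6 bytes of 0xFF, then the MAC repeated 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str,
                      broadcast: str = "255.255.255.255",
                      port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common choice)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


# Example call (placeholder MAC, replace with the server's NIC address):
# send_magic_packet("00:11:22:33:44:55")
```

A small always-on box (a Pi, the router) could run this from a scheduler at the hour the servers should come back up.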

ECC is always nice to have, but not required on all the equipment in a homelab. I do hope we’ll see ECC memory become the norm in the future. I’d argue that ECC should only be used on the storage and backup servers, but even those can run without, just that it’s better if you have it.

for me it’s a combo of things. most consumer options are limited to 128gb of RAM and only 4 sticks, which drastically increases the cost of the memory.

Right now in my cluster I have about 512gb of RAM, most of which is DDR3 ECC, but still it lets me run lots of services. To hit that on consumer parts today I would need to spend around $1300 just for the RAM, and it would take a minimum of 4 PCs, so figure another $400 for motherboards and, depending on CPUs, if we assume Ryzen 5700G, another $1200 in CPUs.

All in, that puts me at about $3000 to get an equivalent number of cores/threads + RAM.
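The arithmetic behind that estimate can be checked in a few lines of Python. The dollar figures and the 128gb per-PC limit are the ones quoted above; the variable names are just for illustration, and cases, PSUs and disks are not counted.

```python
import math

TARGET_RAM_GB = 512        # RAM in the existing cluster
MAX_RAM_PER_PC_GB = 128    # typical consumer board limit mentioned above

# Minimum number of consumer PCs needed to reach the same total RAM.
pcs_needed = math.ceil(TARGET_RAM_GB / MAX_RAM_PER_PC_GB)

# Rough part costs in USD, as quoted in the post.
costs = {"ram": 1300, "motherboards": 400, "cpus": 1200}
total = sum(costs.values())

print(f"PCs needed: {pcs_needed}")          # prints "PCs needed: 4"
print(f"Parts total: ${total}")             # prints "Parts total: $2900"
print(f"Per PC: ${total / pcs_needed:.0f}") # prints "Per PC: $725"
```

So the parts listed come to $2900, which is where the "about $3000" comes from.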

Now since I’m not buying used enterprise, that means I’m not getting any hot swap disk backplanes, not getting any multi channel SAS controllers, not getting redundant hotswap PSUs…

The advantages for used enterprise gear just keep going up for MY use case.

Now if all I was doing was a single server that was just acting as a NAS + Plex or something simple like that, sure, cheap mobo, cheap cpu, 16-32g of ram, and call it a day in a cheap case w/ PSU.

My whole rack when running full tilt is still under 15 amps for 64c/128t w/ 512gb ram, and 144tb of raw storage.
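To put "under 15 amps" into watts, a rough upper bound is just amps times volts. The mains voltage is not stated in the post, so the 120 V and 230 V values below are assumptions, and the helper name is made up for this sketch; it also ignores power factor.

```python
def max_watts(amps: float, volts: float) -> float:
    """Upper bound on power draw for a given current limit (P = I * V)."""
    return amps * volts


RACK_AMPS = 15  # "under 15 amps" from the post, so this is a ceiling

# Assumed mains voltages; the post does not say which one applies.
for volts in (120, 230):
    print(f"{volts} V: under {max_watts(RACK_AMPS, volts):.0f} W")
# prints "120 V: under 1800 W" then "230 V: under 3450 W"
```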

Yes I could build that into 1 epic server, but not for an affordable cost next to used enterprise gear.

Yep, I did say some home labs will run like business data centers. Yours is also the case.

Just out of curiosity, what are you running that demands 512 GB of RAM, 64 cores and 144 TB of raw storage?

I can’t imagine myself going over 128 GB of RAM and 12 cores / 24 threads with all the VMs I’m thinking of running, and that will be just a test lab. I don’t intend to run any “production” workloads on them, just messing around. Things like testing new distros, doing a local domain with a centralized AAA server, maybe testing software for potential malware in a contained environment and possibly trying out software tools like juju, maas or terraform. And doing stuff for K8s certification, which I think I should get. If I feel generous, I might even lend some compute power to some FOSS projects for free (as long as they don’t mine crypto or something).

I actually want an exercise of minimalism on my production workloads and try out mostly containers (LXD) and distributed services (LXD cluster) on really, really low-end hardware, ARM SBCs to be precise. Things would include DNS, wireguard, mail server, certificate authority server, syslog, prometheus, grafana, git server, linux distros local repo (which will need a lot of storage) and outside of containers, but still on SBCs, a ceph cluster.

I honestly don’t need that much, I could probably get away these days with a 3-4 node micro cluster and a decent disk shelf.

I mostly like playing with HA, and to do that right I need more than 1 PC and at least 1 disk per system, and it’s just simpler to do all that on enterprise gear for me.

The reason I have so much RAM is so all my nodes are identical and can run all my services if I were to lose more than 1. Plus I have a spare system with bulk storage to be part of my 3:2:1 solution until I can get my Tape library up and going.

I don’t have a home generator or solar yet, but I want all that for other reasons, not just to help keep my rack up and going. I also work from home, so HA internet and power are important to doing my job.

If I were going for minimalism I would 100% go with used prebuilt micro PCs + a consumer NAS system. You can find off-lease hardware for reasonable prices that gets you cpu, ram, and sometimes even storage for under $500 each. A home setup could be primary/redundant + NAS for storage. All that can fit on a literal shelf and would use maybe 200w total.
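For planning purposes, a continuous draw like that "maybe 200w" converts to yearly energy like this. The 200 W figure is the guess from the post; the electricity price is a purely hypothetical placeholder, so plug in your own rate.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Energy used in a year by a load that draws `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


SETUP_WATTS = 200      # rough total from the post
PRICE_PER_KWH = 0.15   # hypothetical rate, not from the thread

energy = annual_kwh(SETUP_WATTS)
print(f"{energy:.0f} kWh/year")  # prints "1752 kWh/year"
print(f"about ${energy * PRICE_PER_KWH:.0f}/year at ${PRICE_PER_KWH}/kWh")
```

The same function works for measured idle numbers once reviews publish them, which is really the figure that matters for a box that sits idle most of the day.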

2 of those gets you 12c/24t, 16gb ram (upgrade to 32 cheap or 128 total), and 256g storage.

this would be a nice minimal setup with some level of redundancy though your data would not have a backup.

bulk, new for under $600

Alder Lake: taking hints from Bulldozer